Economic development organizations are operating in one of the most complex environments in decades. Small businesses are navigating inflation, constrained access to capital, workforce shortages, regulatory complexity, and rapid technological change. At the same time, public agencies, nonprofits, and ecosystem builders are being asked to do more with tighter budgets while meeting higher accountability standards.

At the center of this challenge sits a persistent and often underestimated issue: small business data is fragmented, inconsistent, and difficult to translate into meaningful impact measurement.

Organizing small business data effectively is no longer a back-office concern. It is a strategic capability that determines whether an organization can deliver programs efficiently, comply with funding requirements, demonstrate outcomes, and continuously improve how it supports entrepreneurs.

This article outlines a practical, scalable approach for economic developers to organize small business data in a way that simplifies reporting, strengthens compliance, and enables credible, real-time impact measurement.

The Reality: Drowning in Data, Starving for Insight

Most economic development organizations are not lacking data. They are overwhelmed by it.

Client intake forms live in one system. Training attendance is tracked in spreadsheets. Capital deployment data exists elsewhere. Technical assistance hours are logged manually. Impact metrics are reconstructed at reporting time.

Each new program, funding source, or reporting requirement adds another layer of complexity. Over time, organizations accumulate a patchwork of tools and processes that were never designed to work together.

The consequences are well known:

- Reporting becomes manual, slow, and error-prone

- Staff spend excessive time cleaning and reconciling data

- Leadership lacks real-time visibility into performance

- Compliance feels reactive rather than embedded

- Impact narratives are difficult to substantiate with evidence

This is not a failure of effort or intent. It is a structural data problem.

Why Organizing Small Business Data Matters More Than Ever

The expectations placed on economic development organizations have changed fundamentally. Stakeholders increasingly demand outcomes, not just activities.

Funders, regulators, and policymakers want clear answers to questions such as:

- Who was served?

- What services were delivered?

- What outcomes were achieved?

- How many jobs were created or retained?

- How much capital was deployed or leveraged?

- Are programs reaching intended populations and geographies?

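Federal, state, and foundation-funded initiatives, such as the State Small Business Credit Initiative (SSBCI), American Rescue Plan Act (ARPA) programs, Minority Business Development Agency (MBDA) programs, Small Business Administration (SBA)-funded efforts, and state or local grant programs, require structured, auditable, and repeatable reporting.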

When data is not organized at the source, reporting becomes a costly, stressful exercise that diverts teams from mission-critical work. When data is organized correctly, reporting becomes a natural output of day-to-day operations.

A Foundational Shift: Design Data Around the Small Business

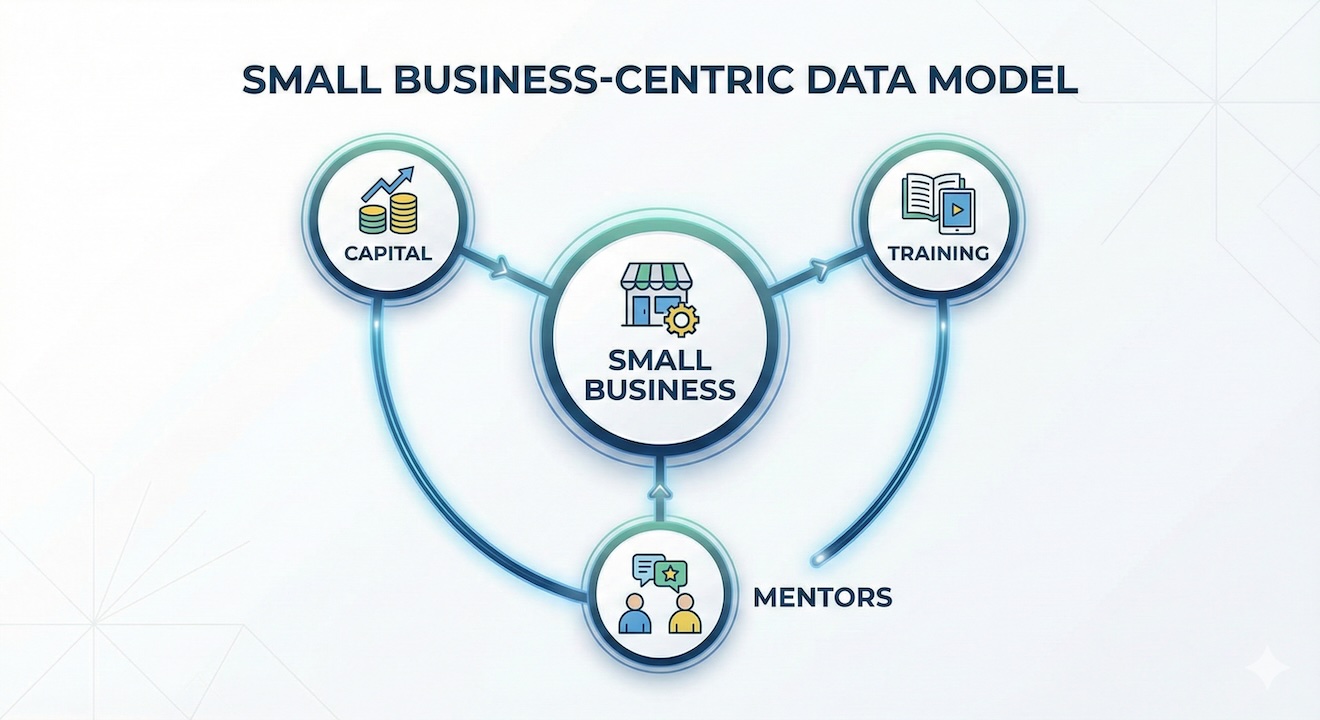

One of the most important changes economic developers can make is moving away from program-centric data toward small business–centric data.

In many organizations, data is structured around individual programs:

- Each program has its own intake

- Each program tracks participants independently

- Outcomes are measured differently across initiatives

Small businesses, however, do not experience an ecosystem as a series of isolated programs. They experience it as a journey. Over time, they may receive training, advising, capital, referrals, and follow-on support across multiple initiatives.

Organizing data around the small business means:

- Maintaining one core business profile

- Assigning a persistent unique identifier

- Tracking engagement longitudinally across programs

- Measuring outcomes over time, not just at program exit

This shift improves data quality, prevents the same business from being counted as a new client in every program it joins, and creates a more accurate picture of impact.

Step 1: Standardize Intake and Client Identity

Effective data organization begins at intake.

If intake processes are inconsistent, duplicated, or incomplete, every downstream report will suffer. A standardized intake process establishes a single source of truth for business and participant information.

Key principles of strong intake design include:

- A unified intake experience across programs where feasible

- Clear definitions of required versus optional fields

- Consistent use of demographic and business classifications

- Built-in validation to reduce errors and omissions

- Explicit consent and privacy controls

Equally important is the creation of a unique client or business identifier. This allows organizations to track engagement, services, and outcomes over time—even when businesses move between programs or re-engage years later.
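To make these intake principles concrete, here is a minimal Python sketch of a standardized intake step that validates required fields, enforces consent, and assigns a persistent identifier. The field names (`business_name`, `zip_code`, `owner_consent`) and the `IntakeRegistry` class are hypothetical. Matching returning businesses on a normalized name plus ZIP code is a simplifying assumption; a production system would likely use stronger matching (for example, a tax ID) and a real database rather than an in-memory dictionary.

```python
import re
import uuid
from dataclasses import dataclass, field

# Hypothetical required fields; each organization and funder defines its own list.
REQUIRED_FIELDS = ("business_name", "zip_code", "owner_consent")


@dataclass
class BusinessProfile:
    business_id: str              # persistent identifier, issued once and never reassigned
    business_name: str
    zip_code: str
    owner_consent: bool
    optional: dict = field(default_factory=dict)


def validate_intake(form: dict) -> list[str]:
    """Return validation errors; an empty list means the form can be accepted."""
    errors = [f"missing required field: {name}" for name in REQUIRED_FIELDS
              if form.get(name) in (None, "")]
    zip_code = form.get("zip_code", "")
    if zip_code and not re.fullmatch(r"\d{5}", str(zip_code)):
        errors.append("zip_code must be five digits")
    if form.get("owner_consent") is False:
        errors.append("consent is required before data can be stored")
    return errors


def match_key(name: str, zip_code: str) -> tuple[str, str]:
    """Normalize name + ZIP so 'Acme Bakery, LLC' and 'acme bakery llc' match (assumed rule)."""
    words = re.sub(r"[^a-z0-9 ]", " ", name.lower()).split()
    words = [w for w in words if w not in {"llc", "inc", "co", "corp"}]
    return (" ".join(words), zip_code)


class IntakeRegistry:
    """In-memory stand-in for a client database keyed by the persistent identifier."""

    def __init__(self) -> None:
        self._by_key: dict[tuple[str, str], BusinessProfile] = {}

    def register(self, form: dict) -> BusinessProfile:
        errors = validate_intake(form)
        if errors:
            raise ValueError("; ".join(errors))
        key = match_key(form["business_name"], form["zip_code"])
        existing = self._by_key.get(key)
        if existing is not None:
            return existing  # re-engagement: reuse the existing persistent ID
        profile = BusinessProfile(
            business_id=str(uuid.uuid4()),
            business_name=form["business_name"],
            zip_code=form["zip_code"],
            owner_consent=form["owner_consent"],
            optional={k: v for k, v in form.items() if k not in REQUIRED_FIELDS},
        )
        self._by_key[key] = profile
        return profile


registry = IntakeRegistry()
first = registry.register({"business_name": "Acme Bakery, LLC", "zip_code": "60601", "owner_consent": True})
later = registry.register({"business_name": "acme bakery llc", "zip_code": "60601",
                           "owner_consent": True, "program": "capital access"})
print(first.business_id == later.business_id)  # True
```

Because the identifier is issued once and reused when a business re-engages, services and outcomes recorded in later programs attach to the same business profile.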

Step 2: Capture Program Activities as Structured Data

Many organizations still treat program activity as narrative notes or informal records. While qualitative context has value, it must be paired with structured data to support reporting and analysis.

Structured activity tracking typically includes:

- Type of service delivered (training, advising, capital access, referral)

- Duration and intensity of support

- Program and funding source attribution

- Dates, milestones, and completion status

- Staff, contractor, or partner involvement

When activities are captured consistently, organizations can easily answer questions such as:

- How much technical assistance was delivered?

- Which services correlate with stronger outcomes?

- Which programs are most effective for specific business segments?
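A sketch of structured activity records shows how these questions become simple queries. It assumes a hypothetical `Activity` schema built from the fields listed above. Treating advising and training as "technical assistance" is also an assumption for illustration; organizations should apply their funders' definitions.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from enum import Enum


class ServiceType(Enum):
    TRAINING = "training"
    ADVISING = "advising"
    CAPITAL_ACCESS = "capital_access"
    REFERRAL = "referral"


# Assumption: "technical assistance" means advising plus training.
TECHNICAL_ASSISTANCE = {ServiceType.ADVISING, ServiceType.TRAINING}


@dataclass(frozen=True)
class Activity:
    business_id: str        # persistent identifier assigned at intake
    service: ServiceType    # type of service delivered
    hours: float            # duration and intensity of support
    program: str            # program attribution
    funding_source: str     # funding source attribution
    service_date: date      # when the service was delivered
    completed: bool         # completion status
    delivered_by: str       # staff member, contractor, or partner


def ta_hours_by_program(activities: list[Activity]) -> dict[str, float]:
    """How much technical assistance was delivered, broken out by program."""
    totals: dict[str, float] = defaultdict(float)
    for activity in activities:
        if activity.service in TECHNICAL_ASSISTANCE:
            totals[activity.program] += activity.hours
    return dict(totals)


def businesses_served(activities: list[Activity], funding_source: str) -> set[str]:
    """Distinct businesses that received any service attributed to one funding source."""
    return {a.business_id for a in activities if a.funding_source == funding_source}


# Illustrative records only.
log = [
    Activity("b1", ServiceType.ADVISING, 3.0, "Main Street", "State grant", date(2024, 3, 1), True, "staff"),
    Activity("b1", ServiceType.TRAINING, 2.0, "Main Street", "State grant", date(2024, 3, 8), True, "partner"),
    Activity("b2", ServiceType.ADVISING, 1.5, "Export Ready", "Federal", date(2024, 4, 2), False, "contractor"),
    Activity("b2", ServiceType.REFERRAL, 0.5, "Export Ready", "Federal", date(2024, 4, 2), True, "staff"),
]
print(ta_hours_by_program(log))           # {'Main Street': 5.0, 'Export Ready': 1.5}
print(businesses_served(log, "Federal"))  # {'b2'}
```

Using an enumeration for service type, rather than free text, is what keeps "advising" from also appearing as "Advising" or "1:1 coaching" in the same report.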

Step 3: Embed Outcomes into Operational Workflows

A common mistake is treating outcomes as something to measure only at the end of a program or reporting period. This approach makes impact measurement reactive and unreliable.

Leading organizations embed outcomes into their workflows from the beginning:

- Outcomes are defined at intake or enrollment

- Progress is tracked at meaningful checkpoints

- Standard definitions are used for jobs, revenue, capital, and growth

- Outcome metrics align with funder and regulatory requirements

When outcomes are integrated into daily operations, impact measurement becomes continuous and defensible rather than episodic and rushed.
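One way to embed outcomes is to record a baseline snapshot at intake and additional snapshots at each checkpoint, then compute change with the same standard definition every time. The sketch below assumes a hypothetical `OutcomeSnapshot` record and counts each part-time job as half of a full-time equivalent (FTE). That weighting is an assumption; funders often define jobs created and retained differently, so the definition should follow each funder's guidance.

```python
from dataclasses import dataclass
from datetime import date

# Assumption: one part-time job counts as half of a full-time equivalent (FTE).
PART_TIME_FTE_WEIGHT = 0.5


@dataclass(frozen=True)
class OutcomeSnapshot:
    business_id: str
    as_of: date                # intake date for the baseline, then each checkpoint date
    full_time_employees: int
    part_time_employees: int
    annual_revenue: float


def fte(snapshot: OutcomeSnapshot) -> float:
    """Apply one standard employment definition to every snapshot."""
    return snapshot.full_time_employees + PART_TIME_FTE_WEIGHT * snapshot.part_time_employees


def outcome_change(snapshots: list[OutcomeSnapshot]) -> dict:
    """Compare the most recent checkpoint with the earliest snapshot (the intake baseline)."""
    if not snapshots:
        raise ValueError("at least one snapshot (the intake baseline) is required")
    ordered = sorted(snapshots, key=lambda s: s.as_of)
    baseline, latest = ordered[0], ordered[-1]
    return {
        "baseline_date": baseline.as_of,
        "latest_date": latest.as_of,
        "fte_change": fte(latest) - fte(baseline),
        "revenue_change": latest.annual_revenue - baseline.annual_revenue,
    }


history = [
    OutcomeSnapshot("b1", date(2024, 1, 15), 3, 2, 250_000.0),  # baseline at intake
    OutcomeSnapshot("b1", date(2024, 7, 15), 4, 2, 280_000.0),  # six-month checkpoint
    OutcomeSnapshot("b1", date(2025, 1, 15), 5, 1, 320_000.0),  # twelve-month checkpoint
]
result = outcome_change(history)
print(result["fte_change"], result["revenue_change"])  # 1.5 70000.0
```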

Step 4: Align Data Models Across Programs and Funders

Most economic development organizations manage multiple programs simultaneously, each with distinct objectives and reporting obligations.

Rather than building separate systems for each initiative, high-performing organizations establish a common data model that supports multiple use cases.

A shared data model allows teams to:

- Reuse data across programs and reports

- Reduce duplication and inconsistencies

- Support multiple funders without re-entering information

- Launch new programs more quickly

This approach is particularly critical for organizations managing federal and state funding, where compliance, auditability, and traceability are essential.
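A common data model can be sketched as one canonical record per business, with each funder's report expressed as a mapping from that funder's column names to fields in the canonical record. The template names (`state_grant`, `foundation`) and their columns below are hypothetical; real column lists come from each funder's reporting requirements.

```python
from typing import Any, Callable

Extractor = Callable[[dict], Any]

# Hypothetical funder templates: funder column name -> how to read it from the canonical record.
FUNDER_TEMPLATES: dict[str, dict[str, Extractor]] = {
    "state_grant": {
        "Business ID": lambda r: r["business_id"],
        "County": lambda r: r["county"],
        "Jobs Created (FTE)": lambda r: r["fte_change"],
    },
    "foundation": {
        "Client Name": lambda r: r["business_name"],
        "Program": lambda r: r["program"],
        "TA Hours": lambda r: r["ta_hours"],
    },
}


def to_funder_rows(records: list[dict], funder: str) -> list[dict]:
    """Render canonical records in one funder's format without re-entering any data."""
    template = FUNDER_TEMPLATES[funder]
    return [{column: extract(record) for column, extract in template.items()} for record in records]


# One canonical record per business, shared by every program and report.
canonical = [
    {"business_id": "b1", "business_name": "Acme Bakery", "county": "Cook",
     "program": "Main Street", "fte_change": 1.5, "ta_hours": 5.0},
]
print(to_funder_rows(canonical, "state_grant"))
# [{'Business ID': 'b1', 'County': 'Cook', 'Jobs Created (FTE)': 1.5}]
print(to_funder_rows(canonical, "foundation"))
# [{'Client Name': 'Acme Bakery', 'Program': 'Main Street', 'TA Hours': 5.0}]
```

Under this design, supporting a new funder means adding a template rather than collecting the same information again.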

Step 5: Automate Reporting Where Possible

Manual reporting is resource-intensive and becomes unsustainable as programs, funders, and reporting requirements multiply.

Once data is structured and governed correctly, reporting can be partially or fully automated. Automation does not eliminate the need for review or narrative interpretation, but it significantly reduces administrative burden.

Automated reporting enables:

- Real-time dashboards for leadership

- Program-level performance monitoring

- Funder-specific report generation

- Faster responses to ad hoc data requests

- Greater confidence in data accuracy

Most importantly, automation frees staff to focus on serving businesses rather than reconciling spreadsheets.
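Once activities share a structure, a funder-level rollup can be generated on demand instead of assembled by hand. This sketch uses plain dictionaries with hypothetical keys (`business_id`, `funding_source`, `hours`, `capital_deployed`) and Python's standard `csv` module. It counts distinct businesses, so a business served several times is reported once per funding source.

```python
import csv
import io
from collections import defaultdict

FIELDNAMES = ["funding_source", "businesses_served", "ta_hours", "capital_deployed"]


def funding_source_summary(activities: list[dict]) -> list[dict]:
    """Roll activity records up into one row per funding source."""
    rollup = defaultdict(lambda: {"businesses": set(), "hours": 0.0, "capital": 0.0})
    for activity in activities:
        row = rollup[activity["funding_source"]]
        row["businesses"].add(activity["business_id"])  # distinct businesses, not visits
        row["hours"] += activity.get("hours", 0.0)
        row["capital"] += activity.get("capital_deployed", 0.0)
    return [
        {
            "funding_source": source,
            "businesses_served": len(row["businesses"]),
            "ta_hours": row["hours"],
            "capital_deployed": row["capital"],
        }
        for source, row in sorted(rollup.items())
    ]


def to_csv(rows: list[dict]) -> str:
    """Serialize summary rows as CSV text for a funder submission or dashboard import."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Illustrative records only.
activities = [
    {"business_id": "b1", "funding_source": "State grant", "hours": 3.0},
    {"business_id": "b1", "funding_source": "State grant", "hours": 2.0},
    {"business_id": "b2", "funding_source": "State grant", "capital_deployed": 50_000.0},
    {"business_id": "b3", "funding_source": "Federal", "hours": 1.5},
]
print(to_csv(funding_source_summary(activities)))
```

The same summary rows can feed a leadership dashboard or a funder-specific template, and staff review shifts from rebuilding numbers to checking them.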

Step 6: Use Data to Improve Programs, Not Just Prove Impact

The highest value of organized data is not compliance—it is learning.

When small business data is well-structured, organizations can:

- Identify which services drive the strongest outcomes

- Detect gaps in reach, equity, or performance

- Adjust programs in near real time

- Allocate resources more strategically

- Strengthen coordination across partners

Data becomes a feedback loop that informs better program design and delivery.
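As a starting point for asking which services drive outcomes, the sketch below compares the average FTE change of businesses that received a given service with the average for businesses that did not. The input shapes (`services_received`, `fte_change`) are hypothetical. This is a descriptive comparison, not a causal estimate: differences may reflect which businesses enroll in a service rather than the effect of the service itself, and small groups produce noisy averages.

```python
from statistics import mean


def outcome_gap_by_service(services_received: dict[str, set[str]],
                           fte_change: dict[str, float]) -> dict[str, float]:
    """Mean FTE change among recipients of each service minus the mean among non-recipients.

    Businesses without a recorded outcome are skipped; services with no comparison group
    on either side are omitted from the result.
    """
    all_services = set().union(*services_received.values())
    gaps = {}
    for service in sorted(all_services):
        with_service = [fte_change[b] for b, s in services_received.items()
                        if service in s and b in fte_change]
        without = [fte_change[b] for b, s in services_received.items()
                   if service not in s and b in fte_change]
        if with_service and without:
            gaps[service] = mean(with_service) - mean(without)
    return gaps


# Illustrative data only.
services = {"b1": {"advising", "capital"}, "b2": {"advising"}, "b3": {"training"}, "b4": {"capital"}}
changes = {"b1": 3.0, "b2": 1.0, "b3": 0.0, "b4": 2.0}
print(outcome_gap_by_service(services, changes))
# {'advising': 1.0, 'capital': 2.0, 'training': -2.0}
```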

From Reporting Systems to Learning Systems

The ultimate objective is not cleaner reports—it is the creation of a learning system for economic development.

A learning system continuously captures data, analyzes outcomes, and feeds insights back into strategy and operations. It allows organizations to adapt as business needs evolve and funding environments change.

In an era of heightened scrutiny and limited resources, organizations that master their data are better positioned to:

- Secure and retain funding

- Scale effective programs

- Build trust with stakeholders

- Demonstrate long-term economic impact

Who This Approach Serves

This framework applies broadly across the economic development ecosystem, including:

- State and local economic development agencies

- Small Business Development Centers (SBDCs)

- CDFIs and capital deployment organizations

- Nonprofits and foundations

- Universities, incubators, and accelerators

- Policymakers and program administrators

Any organization responsible for delivering small business programs, reporting outcomes, or demonstrating impact can benefit from rethinking how data is organized.

Closing Perspective

Organizing small business data is not about adding more tools or bureaucracy. It is about designing data flows that align with mission, operations, and accountability.

When data is organized intentionally:

- Reporting becomes simpler

- Impact becomes clearer

- Teams become more effective

- Small businesses receive better support

In today’s economic development landscape, the ability to organize, trust, and learn from data is not optional—it is foundational to delivering meaningful, measurable impact.
